Authors: Hilda Hadan, Laura Calloway, and Dr. L. Jean Camp

The handshake came first. Then the high-five, the fist bump and, more recently, the elbow bump. We greet each other in many different ways, but a warm handshake is more than a cordial power greeting. It is also a transmission method for diseases.

These days, COVID-19 makes human touch largely unacceptable. The CDC recommends that people stay at least 6 feet away from each other to stay safe. But how can we make sure we maintain this social distancing? And how do we avoid people who have been exposed?

Many countries and regions (e.g., Australia, the UK, the EU) have started adopting contact tracing apps to help manage the spread of COVID-19. Contact tracing has proven to be a powerful tool for containing outbreaks of highly infectious diseases such as smallpox and tuberculosis. Public health authorities and government officials are hoping to use it to identify and isolate people potentially infected by COVID-19. But there are always concerns about privacy. For this reason, many officials, researchers, and individuals are refusing to accept the privacy risks, arguing that the increase in contact tracing is not worth it.

In our recent online survey, we found that health risks associated with a public health crisis, such as a pandemic, did not make participants more willing to share data. Their privacy risk perceptions also remained the same despite these new health risks. This result conflicts with the assertions of technologists, who of course trust their own companies. It indicates that privacy by design is a necessary part of addressing COVID-19.

We know that scope creep is a risk for any project or program. Contact tracing apps record individuals’ movements and the people they encounter, and send this data to a centralized database. Without a properly defined scope, this is effectively surveillance.

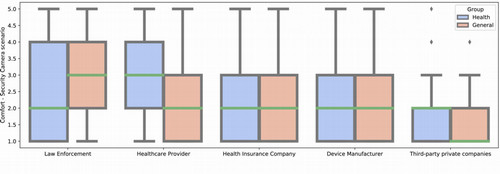

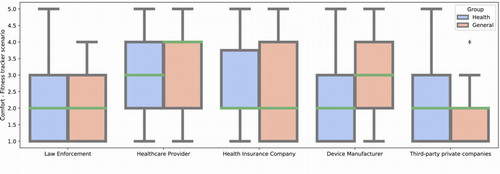

In our survey, participants’ concerns varied by device. Even before the current police violence, participants showed concern about law enforcement having access to location data from Fitbits and cell phones. But there was little concern about police access to security cameras, and greater concern about health authorities having this access. This result emphasizes the need for a clear scope with corresponding limits on data collection, sharing, and retention.

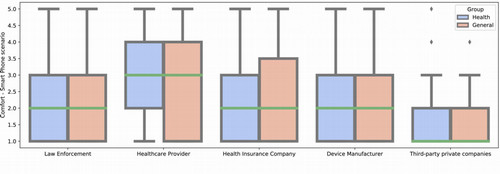

These charts show that participants’ comfort with sharing data varies by device, data type, and recipient. The smartphone results show that people do not want to share more data with Apple, Google, Samsung, and other manufacturers. Healthcare providers are the only moderately trusted stewards. For in-home security cameras, people are again comfortable sharing health data with their healthcare provider.

Before contact tracing, many countries rolled out “shelter-in-place” orders to help contain the spread of COVID-19. But what about people who have no safe place to stay? We found that 2.5% of our participants did not have a safe place to shelter in place. This figure is likely an underestimate for two reasons. First, our survey was only available to participants with desktop computer access, which means that people experiencing homelessness or housing insecurity may have been unable to take it. Second, the desktop computer requirement meant that people experiencing domestic violence would need time alone to answer a question about safety at home.

The pandemic is a disaster by any measure. But it is not a new eternal normal. Treatments, vaccines, and progress will decrease the need for contact tracing. Unlike hand washing, contact tracing apps should not be a permanent change in personal practice.

This is a preliminary result of our survey data analysis. We have found that people’s privacy perceptions vary by device type and data type in a public health crisis, and we will quantify how perceptions differ between users and non-users of specific devices. We will also explore to what degree device usage correlates with privacy perceptions. Risk posture and risk perceptions are nuanced in their interaction with privacy, even in the face of a pandemic. In designing contact tracing apps, it is critical not to create unnecessary risks of surveillance and loss of information autonomy.

Acknowledgement:

This research was supported in part by the National Science Foundation under CNS 1565375, Cisco Research Support, and the Comcast Innovation Fund. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the US Government, the National Science Foundation, Cisco, Comcast, or Indiana University.